Creating an AI Partnership Powered by Ethics


I got a nice New Year shout-out from Tech Cabal for Ndoto, the film I co-produced with Mathew Miguel Cullen of Mirada Studios. You can read about how we created the world’s first AI film on climate action in Africa here.

As a learner-builder, I spend a significant amount of time testing new platforms to assess their true value in my life and in communications. Refined, more powerful versions of these tools will eventually become part of your workflow and make you more efficient and productive.

AI is not going to replace humans, but humans with AI are going to replace humans without AI - Professor Karim Lakhani, Harvard Business School.

Karim Lakhani

There are, of course, many challenges. Front of mind: ethics. Here’s my really smart brother, Irfan Verjee, an AI guy, on ethics and you.

The Rundown will soon be offering a series of practical learning videos to paid subscribers.

Every day I ground myself with the following reminders, because they frame an ethical approach to, and awareness of, all the new tools I work with.

Transparency

Whenever anything I post or submit was produced with any support from an AI, I am transparent about it. If you use ChatGPT and do not tell people you are using it, you’re lying about how your work was created. It’s important that we tell people how the information was obtained. Have a tagline in mind so audiences are aware. Mine is: AI Sous-Chef.

AI Sous-Chef: Midjourney

Here are some other ways you can flag your AI-assisted work, suggested by Claude from Anthropic for your consideration:

- Written by Human, Co-Created with Machine

- Human Plus Bot

- AI-Assisted Article

- Co-Authored with Claude

- Enhanced by Generative Intelligence

Bias

AI systems can perpetuate and amplify unfair or discriminatory outcomes in areas such as hiring, lending, and criminal justice due to the societal biases ingrained in the data used to train these systems - Claude, AI Sous-Chef

Every AI is trained on data sets, and those data sets are biased; the biases have been baked in. Data is seen through lenses that can be Western, discriminatory, or partial. Frames of reference are limited, and so is temporal information. There is a lack of diversity in the data sets that current AI systems have been trained on. So awareness of this is essential. It also means that we need to apply our own critical thinking to the results we receive from a prompt. You do not want to be flogging someone else’s incorrect information or sharing poisoned data without questioning it.

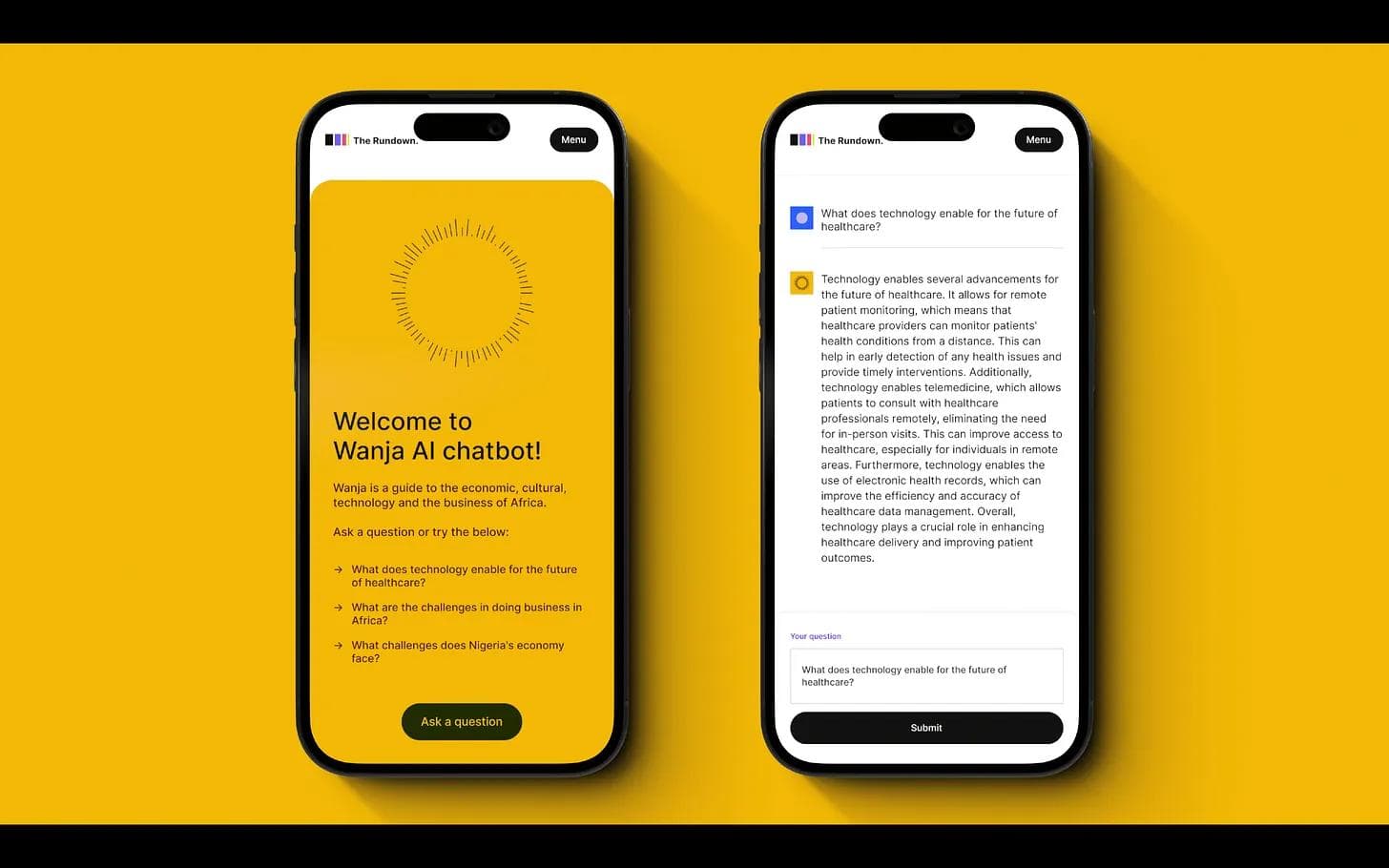

This is also why we at therundown.studio are developing our chatbot, Wanja. She draws on our trusted African data sets to help you understand Africa from the point of view of Africans. Please help beta-test her.

Also, the more often people ask a large language model (LLM) about a term, the more the popular answer to that query may be pushed to the top, simply because of the volume of people asking for the same thing. Over time, that popularity can shape what the model surfaces in future. This means there’s a danger of data degradation, where the AI drops other key pieces of information.
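To make that feedback loop concrete, here is a toy Python simulation. It is a sketch under loudly stated assumptions, not how any real LLM is trained: the function `simulate_feedback`, its `boost` parameter, and the starting counts are all hypothetical. Each round, the most common answer is surfaced and repeated, so its share grows while rarer answers fade.

```python
# Toy model (hypothetical, not how any real LLM works): each round the most
# popular answer is surfaced first, and the people who see it repeat it, so
# it gains `boost` more copies while the other answers stay the same.


def simulate_feedback(counts: dict[str, int], rounds: int, boost: int) -> dict[str, float]:
    """Return each answer's share of all recorded answers after `rounds`."""
    counts = dict(counts)  # copy so the caller's data is left untouched
    for _ in range(rounds):
        top = max(counts, key=counts.get)  # the answer that gets surfaced
        counts[top] += boost  # repeated exposure adds more copies of it
    total = sum(counts.values())
    return {answer: n / total for answer, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical starting mix: a popular answer, a local one, a niche one.
    start = {"popular": 60, "local": 30, "niche": 10}
    shares = simulate_feedback(start, rounds=10, boost=10)
    print({answer: round(share, 2) for answer, share in shares.items()})
```

With these made-up numbers, the popular answer's share climbs from 60% to 80% while the niche answer's share halves from 10% to 5%, even though nobody stopped holding the niche view. That shrinking share is the kind of degradation described above.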

Security

You need to be aware of the security risks. I spoke to a human security expert, Candyce Kelshall, about this. As we share our mindset, our prompts, and our data, how we think about something may no longer belong only to us: it is harvested, along with everyone else’s input, to give perspective. Here are some key themes to consider; we will be deep-diving into them at a later date.

AI systems need personal data to function well, so what are the privacy risks with AI models? How long has the data been collected and stored? What will it ultimately be used for? Will it be used beyond its originally intended purpose? Machines can also infer sensitive information about a person, such as political views or health status, from the questions asked. Your personal data could be used to help a machine make decisions, and you may not be aware of it. What about the unintended exposure of your personal data? - Perplexity AI, AI Sous-Chef

I asked Claude AI to comment on how it was using my personal data. This was the response:

As an AI assistant created by Anthropic to be helpful, harmless, and honest, I do not actually collect or store any of your personal data. Anthropic has designed their AI assistant named Claude (that's me!) using a technique called Constitutional AI to align systems with ethical values like privacy. When you chat with me, any information you provide is processed in an anonymous way to generate responses. I synthesize what you share with my broader training to craft relevant replies. But nothing relating to you as an individual user is leveraged or retained in any database.

AI Sous-Chefs used: Claude, QuillBot, Midjourney, and Perplexity AI.

Takeaway:

Understand the nature of the AI you are using. Declare your usage to make your work more ethically sound.

