RAG, Three Letters and a Big Difference. Huge.

Retrieval-augmented generation (RAG) is an artificial intelligence framework that retrieves data from external sources of knowledge to improve the quality of responses (source: TechTarget).

There's a buzzword in AI circles today, and it's RAG. It stands for Retrieval Augmented Generation. (I'm really learning a lot of new mouthfuls.)

Large Language Models out there today are powerful. Complex prompt engineering and fine-tuning have made these models highly evolved. Now think of RAG as a pretty easy way to supercharge an LLM by giving it access to more recent data, or to data that you own.

See, there are two main problems with Large Language Models. First, the information could be out of date, and second, there's no source. And because the LLM responds so fluently to a query, we can be easily convinced of its accuracy.

The idea of RAG works like this. There's no need to retrain the entire model (Bard, ChatGPT). You can simply add a new content store to augment the existing info.

Practically, that would look like this.

The user prompts the model with a question. That query is sent first to the new content store, where the latest content exists. The relevant content found there is passed to the LLM along with the question, so the external content store can essentially add new information, source it correctly, and ensure it's up to date. After my instruction, I would get an updated and well-sourced response that shows some sort of evidence base.

I need to make sure the new content store I create is accurate and high quality. Otherwise, the user will either get "I don't know" from the RAG or be sent back to information in the LLM that may not be great.
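To make that flow a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration, not how any real product works: the `Document` class, the tiny `CONTENT_STORE` with invented sample text, the word-overlap `retrieve` function, and the `generate` argument that stands in for a call to a real LLM. A real system would most likely use a search index or vector database instead of counting shared words.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Document:
    source: str  # where the content came from, so the answer can cite it
    text: str


# Hypothetical content store with invented sample text.
CONTENT_STORE = [
    Document("sample-a", "The course covers interviewing skills."),
    Document("sample-b", "Press releases need a clear headline."),
]

# Very common words that should not count as a match.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "in", "on", "to", "and"}


def tokenize(text: str) -> set[str]:
    """Lowercase the text, strip simple punctuation, drop stopwords."""
    words = (w.strip(".,?!:;").lower() for w in text.split())
    return {w for w in words if w and w not in STOPWORDS}


def retrieve(query: str, store: list[Document], min_overlap: int = 1) -> list[Document]:
    """Return documents sharing at least `min_overlap` words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc.text)), doc) for doc in store]
    hits = [(score, doc) for score, doc in scored if score >= min_overlap]
    hits.sort(key=lambda pair: pair[0], reverse=True)  # best match first
    return [doc for _, doc in hits]


def build_prompt(query: str, docs: list[Document]) -> str:
    """Combine the retrieved content (with its sources) and the question."""
    context = "\n".join(f"[{doc.source}] {doc.text}" for doc in docs)
    return (
        "Answer using only the sources below and cite them.\n"
        f"Sources:\n{context}\n"
        f"Question: {query}"
    )


def answer(query: str, store: list[Document], generate: Callable[[str], str]) -> str:
    """Retrieve first, then hand the augmented prompt to the model."""
    docs = retrieve(query, store)
    if not docs:
        # Nothing relevant in the content store, so say so instead of guessing.
        return "I don't know."
    return generate(build_prompt(query, docs))


if __name__ == "__main__":
    # Stand-in for a real LLM: it just echoes the prompt it would receive.
    print(answer("How do I write a headline?", CONTENT_STORE, lambda prompt: prompt))
```

The design choice worth noticing is the early return: when the content store has nothing relevant, this sketch answers "I don't know" rather than falling back on whatever the model already believes. That is one option among several, and it is exactly why the quality of the content store matters so much.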

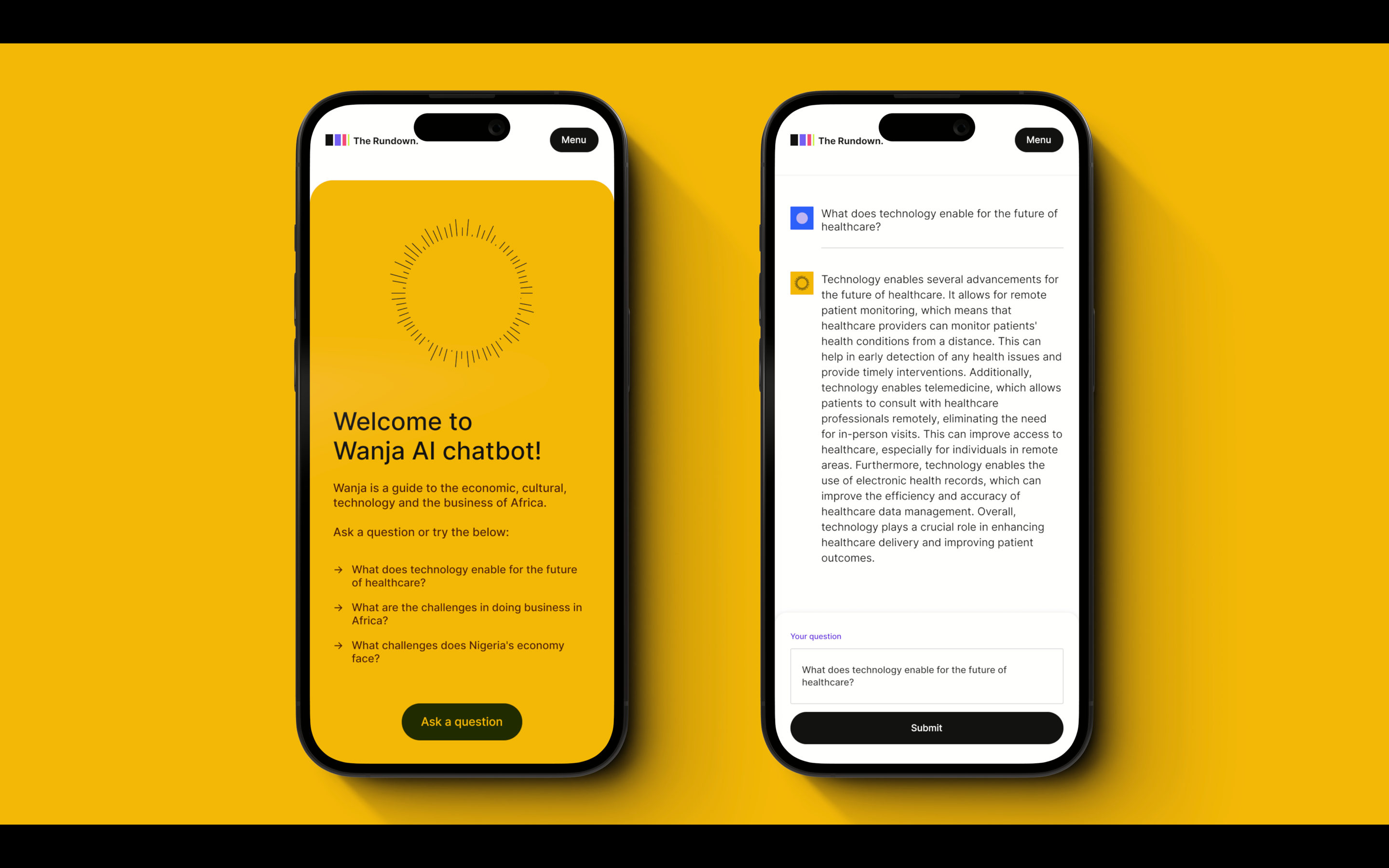

The best way to understand it is by doing it. So our team is in action! The Rundown team is planning to build a comms assistant for users, called Alisha, that can work with you as you take our courses. We do not have enough original coursework at the moment.

So we are testing with the information we have accrued over the years on business in Africa. We have called this bot Wanja. Wanja is awesome.

I will be learning the skills of a prompt engineer, and it's both exciting and intimidating. I do believe comms leaders of the future are going to be structuring teams totally differently, and newsrooms especially will need to think fast about the skill sets required for success, trust and new business models.
